Anatomy of a Scenario

Recently I shared a short scenario I made, involving an e-scooter, a crowd, a bus and an unfortunate ending. It’s definitely not a usual scenario from our “driving-oriented” perspective, so I thought it’d be interesting to explain how it came to life.

Scenario

For context, here’s the scenario first.

Something something e-scooter. #DrivingSimulation

— Bertrand Richard (@brifsttar) August 1, 2021

I spent more time making the cinematic than the scenario, and it still looks bad. The scenario took me around an hour though, so that's nice. pic.twitter.com/HZd1n0PxWM

Why did I make it? I wanted to illustrate innovative use cases for our platform, so that our researchers get a better grasp of what they could potentially study with those new tools. Historically, most of our simulation work focused on driving, hence the term driving simulation. But now, we can simulate pretty much any means of transportation, and any interaction between them. I think researchers could come up with a lot of interesting ideas and projects if they properly understand the power of their tools, so I hope this small scenario helps them toward that goal.

Scene

The scene is re-used from another project we’re currently working on (SUaaVE), and we’ve already talked about it in a previous post, so I’m not going into too much detail here. Basically, we used RoadRunner to create the road network, and then everything above ground is built in Unreal Engine, using a very wide range of Marketplace products.

E-Scooter

The e-scooter was bought as part of a pack of static vehicles, and I then rigged it to create a rideable version. I also made a small animation blueprint for the rider, which places the hands and feet in the right positions using two-bone IK.

The e-scooter is moved by its Virtual Driver, though in this instance it’s definitely not an advanced use case, as it’s simple spline following.
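To give a rough idea of what "simple spline following" means, here is a minimal standalone Python sketch. It is not the Virtual Driver's code: the function name `follow_path` is hypothetical, a polyline stands in for the spline, and the actor simply advances along it by arc length at constant speed, with no steering or vehicle model.

```python
import math

def follow_path(waypoints, speed, dt, steps):
    """Advance a point along a polyline at constant speed.

    Hypothetical helper: the polyline is a stand-in for the spline,
    and positions are returned once per simulation step.
    """
    # Cumulative arc length at each waypoint.
    lengths = [0.0]
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        lengths.append(lengths[-1] + math.hypot(x1 - x0, y1 - y0))
    total = lengths[-1]

    positions = []
    s = 0.0  # distance travelled along the path
    for _ in range(steps):
        s = min(s + speed * dt, total)  # clamp at the end of the path
        # Find the segment that contains arc length s.
        i = 0
        while i < len(lengths) - 2 and lengths[i + 1] < s:
            i += 1
        seg_len = lengths[i + 1] - lengths[i]
        t = (s - lengths[i]) / seg_len if seg_len > 0 else 0.0
        (x0, y0), (x1, y1) = waypoints[i], waypoints[i + 1]
        # Linear interpolation inside the segment.
        positions.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return positions

path = follow_path([(0, 0), (10, 0), (10, 10)], speed=5.0, dt=1.0, steps=5)
```

In a real engine the interpolation would be done by the spline component itself, and the result would usually feed a steering controller rather than set the position directly.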

Crowd

The crowd is made up of the four different types of pedestrians we currently have available in our platform. Each has its benefits, and mixed together they can give great results. We’ll go over each of them, starting from the most basic to the most advanced.

First, we have some static posed humans, coming from the free Twinmotion Posed Humans 1 pack on the Marketplace. They’re very detailed and have a wide variety of poses.

Then, we have Skeletal Mesh Actors, which come from RenderPeople, and on which we play idle animations. Those mostly come from this pack which was free-for-the-month a while back. These pedestrians are not travelling in the world, but at least they have some basic movement.

The third kind of pedestrian we have is smart enough to move within the world. They’re still using RenderPeople meshes for visuals, but the animation is driven by the Advanced Locomotion System (ALS). To guide them within the crowd, target points are placed on the plaza, and the handy Simple Move to Location takes care of the rest.
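The target-point logic is simple enough to sketch outside the engine. The Python below is only an illustration under my own assumptions: `wander` is a hypothetical name, pedestrians walk in straight lines (no navmesh or avoidance, which is what Simple Move to Location handles in Unreal), and at least two target points are required.

```python
import math
import random

def wander(start, targets, speed, dt, steps, accept_radius=0.5, seed=0):
    """Walk toward a random target; on arrival, pick a different one.

    Hypothetical stand-in for the in-engine behaviour: straight-line
    motion only, and `targets` must contain at least two points.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    x, y = start
    goal = rng.choice(targets)
    reached = 0
    for _ in range(steps):
        dx, dy = goal[0] - x, goal[1] - y
        dist = math.hypot(dx, dy)
        if dist <= accept_radius:
            # Close enough: count the arrival and choose a new goal.
            reached += 1
            goal = rng.choice([t for t in targets if t != goal])
            continue
        step = min(speed * dt, dist)  # never overshoot the goal
        x += dx / dist * step
        y += dy / dist * step
    return (x, y), reached

plaza_targets = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
final_pos, arrivals = wander((5.0, 5.0), plaza_targets, speed=1.4, dt=0.1, steps=2000)
```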

And last but not least, our smartest pedestrians can walk along sidewalks. For appearance, we use elements from the Citizen NPC pack, for which we made a small Blueprint that allows us to assemble them easily. For animation, we either use ALS or a simple walking animation. And to follow the sidewalk, we rely on the OpenDRIVE description of the road network, which includes information about sidewalks.
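For readers who haven't looked inside an OpenDRIVE file, sidewalks are lanes whose `type` attribute is `sidewalk`. The Python sketch below shows how they can be found. It is not our platform's code: the embedded XML is a tiny hand-written sample (real files also carry a header, geometry, and sometimes an XML namespace), and `find_sidewalks` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Hand-written, heavily trimmed sample; not taken from a real road network.
SAMPLE_XODR = """<OpenDRIVE>
  <road id="1" length="50.0">
    <lanes>
      <laneSection s="0.0">
        <left>
          <lane id="2" type="sidewalk"><width sOffset="0" a="2.0" b="0" c="0" d="0"/></lane>
          <lane id="1" type="driving"><width sOffset="0" a="3.5" b="0" c="0" d="0"/></lane>
        </left>
        <center><lane id="0" type="none"/></center>
        <right>
          <lane id="-1" type="driving"><width sOffset="0" a="3.5" b="0" c="0" d="0"/></lane>
          <lane id="-2" type="sidewalk"><width sOffset="0" a="1.5" b="0" c="0" d="0"/></lane>
        </right>
      </laneSection>
    </lanes>
  </road>
</OpenDRIVE>"""

def find_sidewalks(xodr_text):
    """Return (road id, lane id, width at sOffset) for every sidewalk lane."""
    root = ET.fromstring(xodr_text)
    sidewalks = []
    for road in root.iter("road"):
        for lane in road.iter("lane"):
            if lane.get("type") != "sidewalk":
                continue
            width = lane.find("width")
            # 'a' is the constant term of the cubic width polynomial.
            start_width = float(width.get("a")) if width is not None else None
            sidewalks.append((road.get("id"), int(lane.get("id")), start_width))
    return sidewalks

sidewalks = find_sidewalks(SAMPLE_XODR)
```

Turning those lanes into a walkable path additionally requires evaluating the road's reference line and the lane offsets, which is left out here.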

Bus

The bus is an Irisbus Citelis, made by Marsupi3D, who very kindly allowed us to use some of his work for our research. We’re very pleased with this bus, as it is the exact same model that our local transportation agency uses, meaning we get that “realism” bonus for our experiments’ participants.

I rigged the bus in Blender, including the doors. In Unreal, I then created a matching animation blueprint and vehicle. I also had to create a custom Physics Asset, to allow NPCs to get in and out of the bus. For that, I used VMR’s Bus as reference, which I highly recommend.

If you look at the picture above, you can catch a glimpse of the kid, who is inside the bus from the get-go. Before making the scenario, I wondered how I would get the kid to move with the bus and then get out. It turns out it couldn’t be simpler: just place the kid on the bus, and he will move with it. No trick required!

The bus is also driven by our Virtual Driver, with basic spline following for lateral control, and our new stop goal, which allows us to accurately stop the bus at the… well, bus stop.
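I won't detail the stop goal here, but the underlying idea of stopping at a given point can be illustrated with basic kinematics. The Python sketch below is an assumption-laden illustration, not the Virtual Driver's implementation: `brake_to_stop` is a hypothetical name, and at each step it applies the deceleration that would bring the vehicle to rest exactly at the remaining distance, from v² = 2·a·d.

```python
def brake_to_stop(speed, distance, dt=0.01):
    """Simulate braking so the vehicle comes to rest `distance` metres ahead.

    Hypothetical sketch: speed in m/s, distance in m, no actuator limits
    or comfort constraints.
    """
    travelled = 0.0
    while speed > 0.01 and travelled < distance:
        remaining = distance - travelled
        # Deceleration needed to stop within the remaining distance.
        decel = speed ** 2 / (2.0 * remaining)
        speed = max(speed - decel * dt, 0.0)
        travelled += speed * dt
    return travelled, speed

# A bus at 10 m/s (36 km/h) with the stop 25 m ahead.
stop_position, final_speed = brake_to_stop(10.0, 25.0)
```

Recomputing the deceleration at every step, rather than once at the start, is what lets the vehicle still land on the stop point if its speed was disturbed along the way.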

Collision

If you follow my Twitter feed, you may remember that I played with collisions a bit. However, I mostly focused on cars hitting other things.

Still messing around with collision for #DrivingSimulation in #UE4. pic.twitter.com/sM6jyAx3wx

— Bertrand Richard (@brifsttar) June 26, 2021

As it turns out, a collision between a pedestrian and an e-scooter is a bit harder to both detect and simulate. The physics bodies are (relatively) small and complex, and there’s one more body to deal with than in a car collision: the car’s driver isn’t impacted by the collision, but the e-scooter rider obviously is.

So I cheated a bit, and used a Trigger Box near the collision point, which activates very basic ragdoll physics on all involved actors. The code itself is straight out of ALS, and it’s more or less a single Blueprint node, so I didn’t spend much brainpower on this.

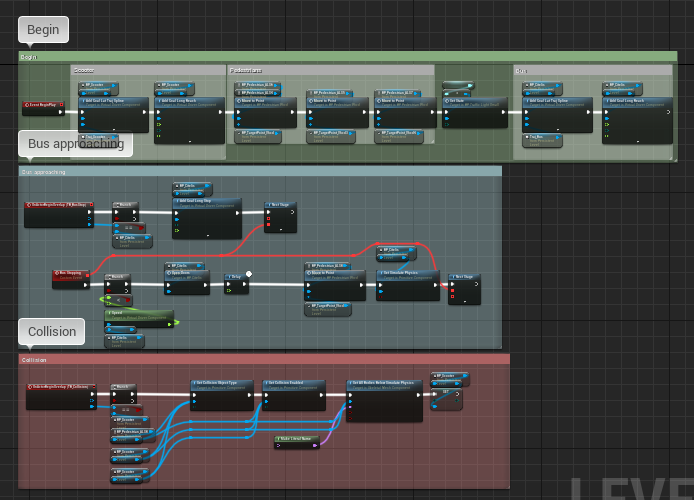
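Outside of Blueprint, the same trick boils down to an overlap test followed by a flag flip. The Python sketch below only mirrors that logic: `Actor`, `TriggerBox` and `update_trigger` are hypothetical names, the box is axis-aligned, and "ragdoll" is a plain boolean rather than actual physics.

```python
from dataclasses import dataclass

@dataclass
class Actor:
    name: str
    position: tuple  # (x, y, z) in metres
    ragdoll: bool = False

@dataclass
class TriggerBox:
    center: tuple        # (x, y, z)
    half_extents: tuple  # half size along each axis

    def contains(self, point):
        # Axis-aligned test: inside if within the half extent on every axis.
        return all(abs(p - c) <= h
                   for p, c, h in zip(point, self.center, self.half_extents))

def update_trigger(box, watched, involved):
    """If any watched actor is inside the box, ragdoll every involved actor."""
    if any(box.contains(a.position) for a in watched):
        for a in involved:
            a.ragdoll = True
        return True
    return False

scooter = Actor("e-scooter", (0.0, 0.0, 0.0))
rider = Actor("rider", (0.0, 0.0, 1.0))
kid = Actor("kid", (10.0, 0.0, 0.0))
box = TriggerBox(center=(10.0, 0.0, 0.0), half_extents=(1.0, 1.0, 1.0))

fired_early = update_trigger(box, [scooter], [rider, kid])  # scooter still far away
scooter.position = (9.5, 0.0, 0.0)                          # scooter enters the box
fired_late = update_trigger(box, [scooter], [rider, kid])
```

Only the e-scooter is watched here, so the kid standing inside the box does not set it off on his own.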

Putting all this together

This scenario took me less than an hour from start to finish, most of which was spent trying to figure out how to make the kid move with the bus, only to realize that the easiest solution possible was the right one. Other than that, it’s just dropping lots of pedestrians in the world, drawing some splines and connecting nodes in the Level Blueprint.